· 15 min read

How Enemy AI Works In Dicey Dungeons

Terry Cavanagh

Lead Developer behind Dicey Dungeons

Editor’s Note: this post was originally published by Terry Cavanagh, indie game designer currently working on Dicey Dungeons. To stay up to date with what Terry is currently working on, your can find him on Twitter, or follow his other projects on his website here.

For the past month or so, I’ve been tackling one of the biggest technical problems in my new game, Dicey Dungeons – improving the enemy AI enough for the final release of the game. It’s been pretty interesting, and lots of it was new to me, so I thought I’d write a little bit about it.

First up, a sort of disclaimer: I’m not a computer scientist – I’m just one of those people who learned enough about programming to make video games, and then stopped learning anything I didn’t have to learn. I can usually muddle through, but a real programmer probably wouldn’t have approached all this the way I did.

I tried to write all this in a fairly high level approach in mind, so that hopefully the basic ideas all make sense to other non-programmers. But I’m for sure no expert on all this stuff, and if I’ve gotten any of the details wrong in explaining the theory, let me know in the comments – happy to make corrections!

Let’s start by explaining the problem!

The problem

If you’ve not played Dicey Dungeons, here’s a crash course: it’s a deckbuilding RPG, where each enemy has a selection of equipment cards that do different things. Also, they roll dice! They then place those dice on the equipment to do damage, or cause various status effects, or heal, or shield themselves from damage, or lots of other things. Here’s a simple example of a tiny frog using a big sword and a little shield:

A more complicated example: this Handyman has a spanner, which allows it to add two dice together (so 3 + 2 would give you a single 5, and a 4 + 5 would give you a 6 and a 3). It also has a Hammer, which “shocks” the player if they use a six on it, and a Pea Shooter, which doesn’t do much damage, but which has a “countdown” which persists across turns.

One more important complication: there are status effects which change what you can do. The most important of these are “Shock”, which disables equipment at random until you unshock it by using an extra dice on it, or “Burn”, which sets your dice on fire. When your dice are on fire, you can still use them – but it’ll cost you 2 health points. Here’s what a clever Handyman does when I shock and burn all his equipment and dice:

There’s more to it than that, of course, but that’s basically the gist of it!

So, the problem: how do you make an AI that can figure out the best thing to do on it’s turn? How does it know which burning dice to extinguish, which dice to use for unshocking and which dice to save for important equipment?

What it used to do

For a long time, my AI in Dicey Dungeons just had one rule: It looked at all the equipment from left to right, figured out the best dice to use on it, and used it. This worked great, until it didn’t. So, I added more rules.

For example, I dealt with shocking by looking at the unshocked equipment, and deciding what dice I would want to use on it when it was unshocked, then marking that dice as “reserved” for later. I dealt with burning dice by just checking if I had enough health to extinguish them, and choosing whether or not to do it by random chance.

Rule after rule after rule to deal with everything I could think of, and ended up with an AI that sorta kinda worked! Actually, it’s amazing how well this hodge-podge of rules held together – the AI in Dicey Dungeons might not have always done the right thing, but it was definitely passable. At least, for a game that’s still a work in progress.

But over time, this system of adding more and more rules to the AI really started to break at the seams. People discovered consistent exploits to get the AI to do stupid things. With the right setup, one of the bosses could be tricked into never actually attacking you, for example. The more rules I added to try to fix things, the more weird things would happen, as rules started to conflict with other rules, and edge cases started to crop up.

Of course, one way to fix this was to just apply more rules – work through each problem one by one, and add a new if statement to catch it. But I think that would have just been kicking the problem further down the road. The limitation this system had was that it was only ever concerned with this question: “What is my next move?”. It could never look ahead, and figure out what might happen from a particular clever combination.

So, I decided to start over.

The classic solution

Look up AI stuff for games, and likely the first solution you’ll come across is a classic decision making algorithm called Minimax. Here’s a video that explains how it’s applied to designing a Chess AI:

Implementing Minimax works like this:

First, you create a lightweight, abstract version of your game, which has all the relevant information for a particular moment in time of the game. We’ll call this the Board. For Chess, this would be the current position of all the pieces. For Dicey Dungeons, it’s a list of dice, equipment, and status effects.

Next, you come up with a value function – a way to measure how well the game is going for a particular configuration of the game – i.e. for a particular board. For Chess, maybe a board where all the pieces are in their initial positions is worth 0 points. A board where you have captured an enemy Pawn is maybe worth 1 point – and maybe a board where you’ve lost one of your own Pawns is worth -1 points. A board where you have your opponent in checkmate is worth infinity points. Or something like that!

Then, from this abstract board. you simulate playing all the possible moves you can make, which gives you a new abstract board. Then, you simulate playing all the possible moves from those boards, and so on, for as many steps as you want. Here’s an excellent illustration of that from freecodecamp.org:

What we’re doing is creating a graph of all the possible moves both players can make, and using our value function to measure how the game is going.

Here’s where Dicey Dungeons splits from Minimax: Minimax comes from mathematical game theory, and it’s designed to figure out the best series of moves in a world where your opponent is trying to maximise their score. It’s so named because it’s about trying to minimise your loss when your opponent plays so to as to maximise their gain.

But for Dicey Dungeons? I actually don’t care what my opponent is doing. For the game to be fun, you just want the AI do make moves that make sense – to figure out the best way to play their dice on their equipment to make it a fair fight. In other words, all I care about is the Max, not the Min.

Which means: for the Dicey Dungeons AI to make a good move, all I need to do is create this graph of possible moves, and look for the board which has the best score – then make the moves that lead to that point.

A simple enemy turn

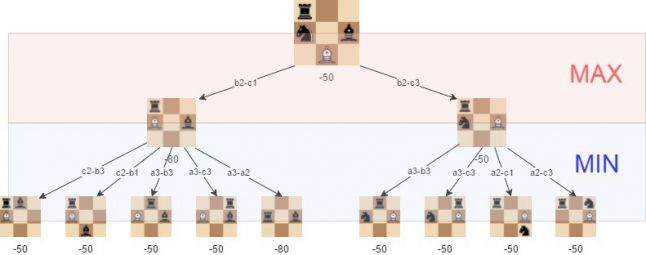

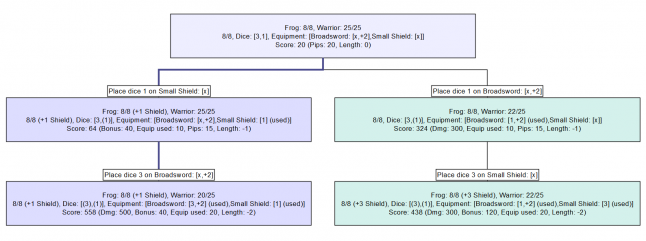

Ok, examples! Let’s look at this frog again! How does it decide what to do? How does it know that it’s chosen action is the best one?

It basically just has has two options. Place the 1 on the broadsword and the 3 on the shield, or do it the other way around. It obviously decides that it’s better off putting that 3 on the sword than the 1. But why? Well, because it looked at all the outcomes:

Place the 1 on the sword and you end up with a score of 438. Place the 3 on it, and you end up with a score of 558. Great, ok! Then, I get a better score by placing the 3 on the Sword, done.

Where’s that score coming from? Well, the Dicey Dungeons scoring system currently considers:

- Damage: The most important case – 100 points for every point of damage dealt.

- Poison: An important status effect that the AI considers almost as important as damage – 90 points for each poison.

- Inflicting other Status effects: Like Shock, Burn, Weaken, etc. Each one of these is worth 50 points.

- Bonus status effects: Inflicting yourself with positive status effects like Shield, etc, is worth 40 points each.

- Using equipment: Using any piece of equipment is worth 10 points – because if all else fails, the AI should just try to use everything.

- Reducing countdowns: Some equipment (like the Pea Shooter) just needs a total value of dice to activate. So, the AI gets 10 points for every countdown point it reduces.

- Dice Pips: The AI gets 5 points for every unused Dice Pip – so a 1 is worth 5, and a 6 is worth 30. This is intended to make the AI prefer not to use dice it doesn’t need to use, and does a lot to make its moves look more human like.

- Length: The AI loses 1 point per move, making it so that long moves have very slightly lower scores than short ones. This is so that if there are two moves that would otherwise have the same score, the AI will pick the shorter one.

- Healing: Worth just 1 point per health point healed, because while I want the AI to consider it in a tie break, I don’t want it to be preoccupied with it. Other things are always more important!

- Bonus score: Bonus score can be applied to any move, to trick the AI into doing something they might not otherwise decide to do. Used very sparingly.

Finally, there’s also two special cases – if the target of the attack is out of health, that’s worth a million points. If the AI is out of health, that’s worth minus a million points. These mean that the AI will never accidentally kill themselves (by extinguishing a dice when they have very low health, say), or never pass up a move that would kill the player.

These numbers aren’t perfect, for sure – take, for example, these currently open issues: #640, #642, #649 – but it actually doesn’t matter that much. Even roughly accurate numbers are enough to incentivise the AI to more or less do the right thing.

Harder enemy turns

The frog case is simple enough that even my shoddy code can figure out every single possibility in 0.017 seconds. But, then things get a bit more complicated. Let’s look at that Handyman again.

It’s decision tree is, uh, a little more complicated:

Unfortunately, even relatively simple cases explode in complexity pretty quickly. In this case, we end up with 2,670 nodes on our decision graph to explore, which takes quite a bit longer to figure out than the frog did – maybe as much as a second or two.

A lot of this is combinatorial complexity – for example, it doesn’t matter which of the 2s we use to unshock the equipment initially, this algorithm considers them as two separate decisions, and creates a whole tree of branching decisions for both. This ends up with a branch that’s a totally unnecessary duplicate. The are similar combination problems with deciding which dice to extinguish, which equipment to unshock, what dice to use in what order.

But even spotting unnecessary branches like this and optimising them (which I’ve been doing to some extent), there is always going to be a point where the complexity of the possible permutations of decisions leads to huge, slow decision trees that take forever to figure out. So, that’s one major problem with this approach. Here’s another:

This important piece of equipment (and things like it) cause a problem for the AI, because they have an uncertain outcome. If I put a six in this, maybe I’ll get a five and a one, or I might get a four and two, or maybe I’ll get two threes. I won’t know until I do it, so it’s really hard to make a plan that takes this into account.

Thankfully, there is a good solution to both of these problems that Dicey Dungeons uses!

The modern solution

Monte Carlo Tree Search (or MCTS, for short) is a probabilistic decision making algorithm. Here is a, uh, slightly odd video which nevertheless explains the idea behind Monte Carlo based decision making really well:

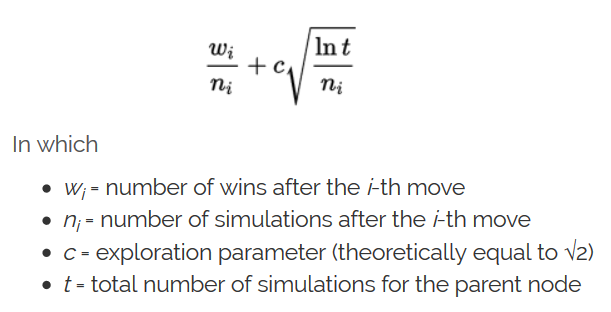

Basically, instead of graphing out every single possible move we can make, MCTS works by trying out sequences of random moves, and then keeping track of the ones that went the best. It can magically decide which branches of our decision tree are the “most promising” thanks to a formula called the Upper Confidence Bound algorithm:

That formula, by the way, is from this very helpful article on Monte Carlo Tree Searches. Don’t ask me how it works!

The wonderful thing about MCTS is that it can usually find the best decision without having to brute force everything, and you can apply it to the same abstract board/move simulation system as minimax. So, you can kinda do both. Which is what I’ve ended up doing for Dicey Dungeons. First, it tries to do an exhaustive expansion of the decision tree, which usually doesn’t take very long and leads to the best outcome – but if that’s looking too big, it falls back to using MCTS.

MCTS has two really cool properties that make it great for Dicey Dungeons:

- One – it’s great at dealing with uncertainty. Because it’s running over and over again, aggregating data from each run, I just let it simulate uncertain moves like using a lockpick naturally, and over repeated runs, it’ll come up with a pretty good range of scores of how well that move will work out.

- Two – it can give me a partial solution. You can basically do as many simulations as you like with MCTS. In fact, in theory, if you let it run forever, it should converge on exactly the same result as Minimax. More to the point for me, though – I can use MCTS to generally get a good decision out of a limited amount of thinking time. The more searches you do, the better the “decision” you’ll find – but for Dicey Dungeons, it’s often good enough to just do a few hundred searches, which only takes a fraction of a second.

Some cool tangents

So, that’s how the enemies in Dicey Dungeons decide how to kill you! I look forward to introducing this in the upcoming version v0.15 of the game!

Here are some tangential thoughts that I don’t really know where to put:

Those graphs I’ve been showing gifs of? Including this one on twitter:

Sure, the neighbours seem to be really enjoying their party, but the REAL fun is going on here: spent the evening hacking together a GraphML exporter for Dicey Dungeons’ new AI! Now I can explore enemy moves and actually see what’s going on step-by-step! #screenshotsaturdaypic.twitter.com/EeCwUz2NBK

— Terry (@terrycavanagh) November 25, 2018

I created these by writing an exporter for GraphML, which is an open source graph file format that can be read with many different tools. (I’ve been using yEd, which is great and which I can recommend a lot.)

Also! Part of making this all work was figuring out how to let the AI simulate moves, which was a big puzzle in and of itself. So, I ended up implementing an action scripting system. Now, when you use a piece of equipment, it runs these tiny little scripts that look like this:

These little scripts are executed by hscript, a haxe based expression parser and interpreter. This was definitely kind of a pain to implement, but the payoff is great: it makes the game super, super modable. I’m hoping that when this game finally comes out, people will be able to use this system to design their own equipment that can do basically any cool thing they can think up. And, even better, because the AI is smart enough to evaluate any action you give it, enemies will be able to figure out how to do whatever weird modded equipment you give it!

Thanks for reading! Happy to answer any questions or to clarify any of this in the comments below!

(And, finally, if you’re interested in playing Dicey Dungeons, you can get alpha access on itch.io right now, or if you prefer, wishlist us on steam, which will send you a little reminder when the game comes out.)