
Three ways to reduce the costs of your HTTP(S) API on AWS

Magnus Henoch

Senior Software Developer

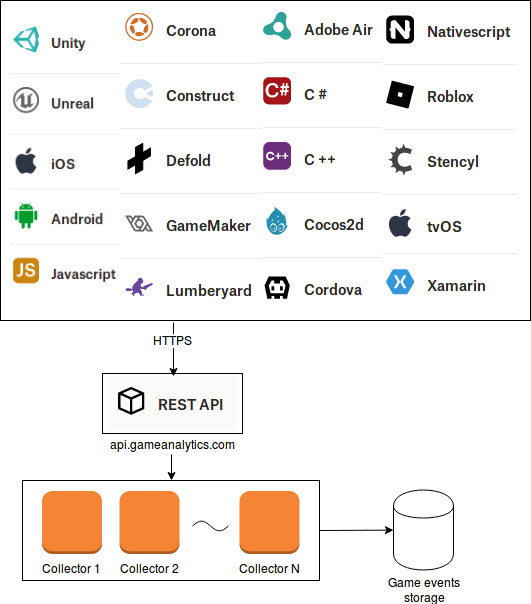

Here at GameAnalytics, we receive, store and process game events from 1.2 billion monthly players in nearly 90,000 games. These events all pass through a system we call the data collection API, which forwards the events to other internal systems so that we eventually end up with statistics and graphs on a dashboard, displaying user activity, game revenue, and more.

The data collection API is fairly simple in principle: games send events to us as JSON objects through HTTP POST requests, and we send a short response and take the event from there. Clients either use one of our SDKs or invoke our REST API directly.

We get approximately five billion requests per day, each typically containing two or three events for a total of a few kilobytes. The response is a simple HTTP 200 “OK” response with a small bit of JSON confirming that the events were received. The general traffic pattern is a high number of relatively short-lived connections: clients send just over two HTTP requests per connection on average.

So what would you guess is the greatest cost associated with running this system on AWS, with a fleet of EC2 instances behind a load balancer?

We wouldn’t have guessed it, but the greatest part of the cost is data transfer out. Data transfer in from the Internet is free, while data transfer out to the Internet is charged at between 5 and 9 cents per gigabyte.

So we set out to do something about this and see if we could save some money here. We were a bit surprised that we couldn’t find anything written about what to do in this scenario – surely our use case is not entirely uncommon? – so hopefully this will be useful to someone in a similar situation.

1. Reduce HTTP headers

Before these changes, a response from this system would look like this, for a total of 333 bytes:

    HTTP/1.1 200 OK
    Connection: Keep-Alive
    Content-Length: 15
    Content-Type: application/json
    accept-encoding: gzip
    Access-Control-Allow-Origin: *
    X-GA-Service: collect
    Access-Control-Allow-Methods: GET, POST, OPTIONS
    Access-Control-Allow-Headers: Authorization, X-Requested-With, Content-Type, Content-Encoding

    {"status":"ok"}

(Remember that the line breaks are CRLF, and thus count as two bytes each.)
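A quick way to double-check a byte count like this is to serialize the response exactly as it goes on the wire. Here is a minimal Python sketch, assuming the headers are sent in the order shown above and the body is plain ASCII; `response_bytes` is an illustrative helper, not part of any library:

```python
# The pre-trimming response, one entry per line, in the order shown above.
ORIGINAL_LINES = [
    "HTTP/1.1 200 OK",
    "Connection: Keep-Alive",
    "Content-Length: 15",
    "Content-Type: application/json",
    "accept-encoding: gzip",
    "Access-Control-Allow-Origin: *",
    "X-GA-Service: collect",
    "Access-Control-Allow-Methods: GET, POST, OPTIONS",
    "Access-Control-Allow-Headers: Authorization, X-Requested-With, Content-Type, Content-Encoding",
]
BODY = '{"status":"ok"}'


def response_bytes(lines: list[str], body: str = "") -> bytes:
    """Serialize an HTTP/1.1 response: each status/header line ends in CRLF,
    and one more CRLF (an empty line) separates the headers from the body."""
    head = "".join(line + "\r\n" for line in lines)
    return (head + "\r\n" + body).encode("ascii")


if __name__ == "__main__":
    print(len(response_bytes(ORIGINAL_LINES, BODY)))  # 333
```

The ten CRLF pairs (nine header lines plus the empty line) account for 20 of those 333 bytes.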

Since we send this response five billion times per day, every byte we shave off saves five gigabytes of outgoing data per day; at the lowest rate of 5 cents per gigabyte, that is a saving of 25 cents per day for every byte removed.
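The per-byte arithmetic can be written out as a small Python function. This is a sketch assuming decimal gigabytes (10^9 bytes) and the lowest quoted rate of 5 cents per gigabyte; actual AWS pricing depends on region and volume tier:

```python
REQUESTS_PER_DAY = 5_000_000_000  # approximate volume quoted above
USD_PER_GB = 0.05  # assumption: the lowest data-transfer-out rate quoted above


def daily_saving_usd(bytes_saved_per_response: int,
                     requests_per_day: int = REQUESTS_PER_DAY,
                     usd_per_gb: float = USD_PER_GB) -> float:
    """Dollars saved per day if every response shrinks by the given number of bytes."""
    gb_saved_per_day = bytes_saved_per_response * requests_per_day / 1e9
    return gb_saved_per_day * usd_per_gb


if __name__ == "__main__":
    print(round(daily_saving_usd(1), 2))  # 0.25
```

Plugging in the 9-cent rate instead gives 45 cents per byte per day, so 25 cents is the conservative end of the range.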

Much of this could simply be removed:

- The Access-Control-Allow-Methods and Access-Control-Allow-Headers response headers are CORS headers, but they’re only required in responses to preflight requests using the OPTIONS method, so they are superfluous in responses to POST requests.

- The Access-Control-Allow-Origin header is still required, but only when the request is a CORS request, which we can determine by checking for the Origin request header. For any request not sent by a web browser, we can just omit it.

- The Accept-Encoding header is actually a request header; including it in the response has no meaning.

- Finally, the X-GA-Service header was once used for debugging, but we don’t use it anymore, so it can be dropped as well.
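As a sketch of these rules, here is a Python function that builds the trimmed response and adds Access-Control-Allow-Origin only when the request carried an Origin header. The name `collect_response` and the way the Origin value is passed in are hypothetical; in a real server the header would come from whatever request object your framework provides:

```python
from typing import Optional

BODY = '{"status":"ok"}'


def collect_response(origin: Optional[str]) -> bytes:
    """Build the response to a POST on the collection endpoint.

    `origin` is the value of the request's Origin header, or None if absent.
    Access-Control-Allow-Methods/-Headers are left out: they only matter in
    responses to OPTIONS preflight requests. Accept-Encoding (a request header)
    and the old X-GA-Service debugging header are dropped as well.
    """
    body = BODY.encode("ascii")
    lines = [
        "HTTP/1.1 200 OK",
        "Connection: Keep-Alive",
        f"Content-Length: {len(body)}",
        "Content-Type: application/json",
    ]
    if origin is not None:
        # Only browser (CORS) requests send Origin, and only they need this header.
        lines.append("Access-Control-Allow-Origin: *")
    head = "".join(line + "\r\n" for line in lines)
    return head.encode("ascii") + b"\r\n" + body


if __name__ == "__main__":
    print(len(collect_response(None)))  # 110
    print(len(collect_response("https://example.com")))  # 142
```

Counted this way, the response to a non-browser request is 110 bytes, and a CORS request pays an extra 32 bytes for the Access-Control-Allow-Origin line.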

So for the vast majority of requests, the response would look like this:

    HTTP/1.1 200 OK
    Connection: Keep-Alive
    Content-Length: 15
    Content-Type: application/json

    {"status":"ok"}

Sending 110 bytes instead of 333 means saving $56 per day, or a bit over $1,500 per month.

So it stands to reason that by reducing data sent to a third, costs for data transfer should drop by about two thirds, right? Well, costs dropped, but only by 12%. That was a bit underwhelming.

2. Also reduce TLS handshakes

Obviously, before we can send those 110 bytes of HTTP response, we need to establish a TLS session by exchanging a number of messages collectively known as a “TLS handshake”. We made a request to our service while capturing network traffic with Wireshark, and discovered that the server sends out 5433 bytes during this process, the largest part of which is the certificate chain, taking up 4920 bytes.

So reducing the HTTP response, while important, doesn’t have as much impact as reducing TLS handshake transfer size. But how would we do that?

One thing that reduces handshake transfer size is TLS session resumption. Basically, when a client connects to the service for the second time, it can ask the server to resume the previous TLS session instead of starting a new one, meaning that it doesn’t have to send the certificate again. By looking at access logs, we found that 11% of requests were using a reused TLS session. However, we have a very diverse set of clients that we don’t have much control over, and we also couldn’t find any settings for the AWS Application Load Balancer for session cache size or similar, so there isn’t really anything we can do to affect this.

That leaves reducing the number of handshakes required by reducing the number of connections that the clients need to establish. The default setting for AWS load balancers is to close idle connections after 60 seconds; raising this to 10 minutes lets clients keep using an established connection for longer instead of opening a new one and paying for another handshake. This reduced data transfer costs by an additional 8%.
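On an Application Load Balancer, this setting is the `idle_timeout.timeout_seconds` load balancer attribute. A minimal Python sketch using boto3's `modify_load_balancer_attributes`, assuming boto3 is installed and AWS credentials are configured; the helper names are hypothetical, and the AWS console or CLI works just as well:

```python
IDLE_TIMEOUT_SECONDS = 600  # 10 minutes, up from the 60-second default


def idle_timeout_attributes(seconds: int) -> list[dict[str, str]]:
    """Attribute list for an ALB idle-timeout change (attribute values are strings)."""
    if not 1 <= seconds <= 4000:  # the range AWS accepts for ALB idle timeouts
        raise ValueError("ALB idle timeout must be between 1 and 4000 seconds")
    return [{"Key": "idle_timeout.timeout_seconds", "Value": str(seconds)}]


def set_idle_timeout(load_balancer_arn: str,
                     seconds: int = IDLE_TIMEOUT_SECONDS) -> None:
    """Apply the idle timeout to an Application Load Balancer."""
    import boto3  # imported here so idle_timeout_attributes() works without boto3

    client = boto3.client("elbv2")
    client.modify_load_balancer_attributes(
        LoadBalancerArn=load_balancer_arn,
        Attributes=idle_timeout_attributes(seconds),
    )
```

Calling `set_idle_timeout` with your own load balancer's ARN applies the change; the attribute takes effect without recreating the load balancer.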

3. Check your certificates

Does a certificate chain really need to take up 4920 bytes?

We initially used a certificate from AWS Certificate Manager. It’s very convenient: there is no need to copy files anywhere, the certificate renews itself automatically, and it’s free. The downside is that multiple intermediate certificates are required to establish a trust chain to a root certificate:

- The gameanalytics.com certificate itself, 1488 bytes

- An intermediate certificate for “Amazon Server CA 1B”, 1101 bytes

- An intermediate certificate for “Amazon Root CA 1”, 1174 bytes

- “Starfield Services Root Certificate Authority”, 1145 bytes (despite the name, this is an intermediate certificate, not a root certificate)

That sums to 4908 bytes, but each certificate is preceded by a 3-byte length field, so the Certificate message of the TLS handshake contains 4920 bytes of certificate data.

So in order to reduce the amount of data we’d need to send in each handshake, we bought a certificate from DigiCert instead. Its chain is much shorter:

- The gameanalytics.com certificate itself, 1585 bytes

- “DigiCert SHA2 Secure Server CA”, 1176 bytes

Including the 3-byte length fields, that comes to 2767 bytes in all.
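Both chain totals are simple sums. A Python sketch, assuming framing as in TLS 1.2, where each DER-encoded certificate in the handshake's Certificate message is preceded by a 3-byte length (the message's own headers are not counted, matching the figures quoted here):

```python
CERT_LENGTH_PREFIX = 3  # bytes of length field before each certificate

# DER sizes in bytes, as listed above.
# Leaf, Amazon Server CA 1B, Amazon Root CA 1, Starfield Services Root CA.
ACM_CHAIN = [1488, 1101, 1174, 1145]
# Leaf, DigiCert SHA2 Secure Server CA.
DIGICERT_CHAIN = [1585, 1176]


def certificate_data_bytes(der_sizes: list[int]) -> int:
    """Bytes of certificate data for a chain: each DER blob plus its length prefix."""
    return sum(size + CERT_LENGTH_PREFIX for size in der_sizes)


if __name__ == "__main__":
    print(certificate_data_bytes(ACM_CHAIN))       # 4920
    print(certificate_data_bytes(DIGICERT_CHAIN))  # 2767
```

The difference, 2153 bytes, is what each full handshake saves; resumed sessions don't send the certificate at all.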

Given that the clients establish approximately two billion connections per day, and each full handshake now sends 2153 fewer bytes of certificate data, we’d expect to save about four terabytes of outgoing data every day. The actual savings were closer to three terabytes, but this still reduced data transfer costs for a typical day by almost $200.
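The expected-savings estimate is the per-handshake difference multiplied by the number of connections. A sketch assuming decimal terabytes (10^12 bytes) and that every connection performs a full handshake; the optional `full_handshake_fraction` parameter is a hypothetical knob for discounting connections that resume a session and therefore skip the certificate, not something measured here:

```python
CONNECTIONS_PER_DAY = 2_000_000_000  # approximate figure quoted above
ACM_CHAIN_BYTES = 4920
DIGICERT_CHAIN_BYTES = 2767


def expected_daily_saving_tb(connections_per_day: int = CONNECTIONS_PER_DAY,
                             old_chain_bytes: int = ACM_CHAIN_BYTES,
                             new_chain_bytes: int = DIGICERT_CHAIN_BYTES,
                             full_handshake_fraction: float = 1.0) -> float:
    """Terabytes of outgoing data saved per day by sending the shorter chain."""
    full_handshakes = connections_per_day * full_handshake_fraction
    return full_handshakes * (old_chain_bytes - new_chain_bytes) / 1e12


if __name__ == "__main__":
    print(round(expected_daily_saving_tb(), 2))  # 4.31
```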

And further cost reduction opportunities

We’re probably in diminishing returns territory already, but there are some things we haven’t mentioned above:

- If the clients use HTTP/2, data transfer decreases further, as response headers are compressed. Approximately 4% of our incoming requests are made using HTTP/2, but we don’t really have any way of increasing this percentage. In AWS, Application Load Balancers (ALBs) support HTTP/2 without any configuration required, whereas “classic” load balancers don’t support it at all.

- We’re currently using an RSA certificate with a 2048-bit public key. We could try switching to an ECC certificate with a 256-bit key instead — presumably most or all clients are compatible already.

- There is room for decreasing certificate size further. We currently use a wildcard certificate with two subject alternative names; we could save a few bytes by using a dedicated certificate for the one domain name this service uses.

- Some of the clients use more than one of our APIs. Currently, they are served under different domain names, but by serving them under the same domain name and using ALB listener rules to route requests, the client would only need to establish one TCP connection and TLS session instead of two, thereby reducing the number of TLS handshakes required.

- If we’re prepared to introduce an incompatible API change, we could start returning “204 No Content” responses to clients. A “204 No Content” response by definition has no response body, so we could drop the {"status":"ok"} body as well as the Content-Type and Content-Length headers. That removes 67 bytes, but the longer status line adds back 8, for a net saving of 59 bytes per response, or approximately $15 per day.
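The saving from a 204 response can be checked the same way as the earlier byte counts. A sketch assuming the trimmed 200 response shown earlier and a 204 response carrying only the Connection header; `response_bytes` is again an illustrative helper:

```python
def response_bytes(lines: list[str], body: str = "") -> bytes:
    """Serialize an HTTP/1.1 response with CRLF line endings."""
    return ("".join(line + "\r\n" for line in lines) + "\r\n" + body).encode("ascii")


TRIMMED_200 = response_bytes(
    ["HTTP/1.1 200 OK", "Connection: Keep-Alive",
     "Content-Length: 15", "Content-Type: application/json"],
    '{"status":"ok"}',
)
# A 204 has no body; HTTP/1.1 forbids Content-Length on it, and Content-Type
# has nothing to describe, so only the status line and Connection remain.
NO_CONTENT_204 = response_bytes(["HTTP/1.1 204 No Content", "Connection: Keep-Alive"])

if __name__ == "__main__":
    print(len(TRIMMED_200), len(NO_CONTENT_204))   # 110 51
    print(len(TRIMMED_200) - len(NO_CONTENT_204))  # 59
```

Note that “204 No Content” is 8 bytes longer than “200 OK”, which offsets part of the saving.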

Also, the certificate contains lengthy URLs for CRL download locations and OCSP responders, 164 bytes in total. While these are required security features, it could be a selling point for a Certificate Authority to use URLs that are as short as possible. Starfield Technologies is setting a good example here: it uses the host names x.ss2.us and o.ss2.us for these purposes.

Here are some upcoming TLS extensions that would also reduce the size of handshakes:

- There is an RFC draft for TLS certificate compression.

- There is also RFC 7924, “Cached Information Extension”, which means that the server doesn’t need to send its certificate chain if the client has seen it earlier. However, this doesn’t seem to have been implemented in any TLS client library, and it is most likely not supported by AWS load balancers either.

So that’s what we’ve learned so far. Remember to check your certificates as they might be bigger than required, increase the idle connection timeout as it’s cheaper to keep an established connection open, and trim your HTTP response headers.

Do you have any thoughts or insights? Let us know by tweeting us.

P.S. We’re hiring!

Are you a savvy developer looking to work in the cutting-edge of the tech industry? Brilliant – we’re on the lookout for ambitious, bright, and enthusiastic minds to join our growing engineering team. Visit the GameAnalytics careers page to see the benefits we offer and all available roles. And if you don’t see an open position that you’re interested in, drop us an email with your details – we’re more than happy to chat!