
Personalizing Offers for In-App Monetization – Lessons from Crazy Panda

Ivan Kozyev

Head of Analytics at Crazy Panda

At Crazy Panda we publish lots of different types of titles, like World Poker Club (the biggest poker game in the Commonwealth of Independent States), Stellar Age (a mid-core mobile MMO strategy game) and Household (a farm game for social networks). These games might look pretty different, but they all have two things in common: in-app purchase monetization and personalized offers. In fact, personalized offers account for between 50% and 80% of our revenue, depending on the product.

So we’ve spent a lot of time making sure we get these right. And we’d like to pass on a bit of what we’ve learned to you. We think you can use this for most, if not all, games with in-app monetization.

Before we begin

Let’s start by nailing down a few technical terms which you may or may not already know. We’ll be using these throughout this post.

- Bank: the place where all players make in-app purchases, AKA the game’s store.

- Bank item: things in the bank that people can buy.

- Offer: something a bit special that’s different from regular bank items. These are generally personalized for the player in some way, like items that only appear when they achieve something in the game.

- Personalization: what this post is all about – creating offers in a way that fits with your users while also maximizing their lifetime value (LTV).

So why is personalization important? We could just sell every valuable thing in the game for $1, which would probably suit almost every player. But would it maximize their LTV? Nope.

The different parts of an offer

Before we get into how to personalize offers, let’s take a look at the things we can change in them.

- The content: What the offer is. What makes it valuable to the player? Why would they want it? This completely depends on your game’s content, so we won’t be talking too much about it in this post. That doesn’t mean you should underestimate its importance though, so make sure you spend time thinking about it.

- The cost: To maximize LTV we want this to be as high as possible, without being so expensive that people don’t want to pay it.

- Discounts or bonuses: These are quite similar, but let’s assume that giving people bonuses increases an offer’s value to them. And don’t forget that we don’t want to give everything away at once.

- Lifetime: How long an offer stays available to someone. It has to be long enough for the user to evaluate the offer and decide whether or not to buy it, but not so long that we lose the chance to replace it if it doesn’t fit.

- Cooldown: The time between one offer ending and another one starting.

- Pop-up: When and how you show your offers to users. The most common way of doing this is to show an offer once at the start of the user’s session, then leave it with some kind of timer (as offers can and should expire), like a widget in the bank or on the main screen. Pop-up is a really powerful feature, but it’s easy to overuse, so tread carefully.
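To make these parameters concrete, here is a minimal Python sketch of an offer record and the timing rules above: a lifetime, a cooldown before the next offer, and a pop-up shown at most once per session. The `Offer` class, its field names and the helper functions are hypothetical, not Crazy Panda’s actual system.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Offer:
    """Hypothetical offer record covering the parameters listed above."""
    content: dict        # what the player gets, e.g. {"hard_currency": 500}
    price_usd: float     # the cost
    bonus_pct: float     # extra value versus the equivalent bank item
    lifetime: timedelta  # how long the offer stays available
    cooldown: timedelta  # gap between this offer ending and the next starting
    popup_once_per_session: bool = True


def is_active(offer: Offer, started_at: datetime, now: datetime) -> bool:
    """True while the offer's timer is still running."""
    return started_at <= now < started_at + offer.lifetime


def next_offer_allowed_at(offer: Offer, started_at: datetime) -> datetime:
    """Earliest moment the next offer may start: end of lifetime plus cooldown."""
    return started_at + offer.lifetime + offer.cooldown


def should_pop_up(offer: Offer, started_at: datetime, now: datetime,
                  shown_this_session: bool) -> bool:
    """Pop up at most once per session while the offer is live; otherwise
    the player only sees the widget with its timer."""
    if not is_active(offer, started_at, now):
        return False
    return not (offer.popup_once_per_session and shown_this_session)
```

Keeping the timing rules in plain functions like this makes it easy to vary lifetime and cooldown per segment or per AB test group.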

Every one of these is important. And if you get one of them wrong, it can be really bad news for your game.

Personalization – the basics

A little while ago one of our Crazy Panda designers said: ‘Hey, I’ve been reading about pricing tricks. Apparently we should be putting bigger bank items on the left because people read from left to right.’ We thought this sounded really interesting and decided to give it a try with an AB test. So we gave half of the audience the old bank and the other half a new, inverted one. Which one do you think was more successful?

The answer might surprise you. People actually spent the same amount of money in both banks (and we were left with one upset designer). Usually that would be the end of the story. But we weren’t ready to give up yet. So we split our payers into two groups – ‘whales’ and ‘others’, and ran our AB test again.

The results

In the ‘others’ group, the test bank led to a significant decrease in payments per user, while average revenue per payment stayed the same. In the ‘whales’ group, it led to a significant increase in average revenue per payment, with no decrease in the number of payments per user. So we decided to invert the bank only for users who match the whales’ criteria.
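The pooled result looked flat because revenue per user is the product of payments per user and average revenue per payment, and the two segments moved different factors in opposite directions. The sketch below computes these metrics per segment and pooled; the `segment_metrics` function and the toy data are made up for illustration and are not the real test results.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def segment_metrics(
    users: Iterable[Tuple[str, str, List[float]]]
) -> Dict[Tuple[str, str], Dict[str, float]]:
    """Metrics per (segment, group), plus pooled ("all", group) totals.

    Each user is given as (segment, group, payment_amounts)."""
    totals = defaultdict(lambda: {"users": 0, "payments": 0, "revenue": 0.0})
    for segment, group, payments in users:
        for key in ((segment, group), ("all", group)):
            t = totals[key]
            t["users"] += 1
            t["payments"] += len(payments)
            t["revenue"] += sum(payments)

    result = {}
    for key, t in totals.items():
        payments_per_user = t["payments"] / t["users"]
        avg_per_payment = t["revenue"] / t["payments"] if t["payments"] else 0.0
        result[key] = {
            "payments_per_user": payments_per_user,
            "avg_revenue_per_payment": avg_per_payment,
            # Revenue per user decomposes into the two metrics above.
            "revenue_per_user": payments_per_user * avg_per_payment,
        }
    return result


# Made-up toy data: pooled revenue per user is identical in both groups,
# but whales pay more per payment while others pay less often.
toy = [
    ("whales", "control", [20, 20]), ("whales", "test", [30, 30]),
    ("others", "control", [5, 5, 5, 5]), ("others", "control", [5, 5, 5, 5]),
    ("others", "test", [5, 5]), ("others", "test", [5, 5]),
]
m = segment_metrics(toy)
```

Looking only at `m[("all", "control")]` and `m[("all", "test")]` would suggest the change did nothing; the per-segment rows show two real effects cancelling out.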

This is the start of personalization: splitting your audience into groups with similar habits. Of course, in an ideal world we could create a group for every player to give them the very best experience. But in practice we have to work with much larger groups.

How to segment your users

All products are unique, so there’s no catch-all technique for user segmentation (sorry!). But there are two obvious groups for pretty much every app: payers and non-payers. You can then split these further, for example into players you want to build a long-term payment relationship with and regular payers. And don’t forget churning players, who have missed the time they were expected to make their next payment.

Once you’ve defined these groups you can split them again. And again. Make offers depend on in-game behavior, spice it up with different offers, and you’ve monetized your app.
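As a rough illustration, rule-based segmentation along these lines might look like the sketch below. The segment names, the three-payment threshold and the ‘more than twice the usual gap between payments’ churn rule are all assumptions chosen for the example; real criteria depend on your product.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PlayerStats:
    payment_days: List[int]  # days since install on which the player paid, ascending
    today: int               # current day since install


def segment(p: PlayerStats, min_payments_for_regular: int = 3,
            churn_factor: float = 2.0) -> str:
    """Rule-based segmentation; all thresholds are illustrative assumptions."""
    if not p.payment_days:
        return "non_payer"
    if len(p.payment_days) < min_payments_for_regular:
        # Early payers: players we want to build a long-term relationship with.
        return "early_payer"
    gaps = [b - a for a, b in zip(p.payment_days, p.payment_days[1:])]
    # Expected time between payments, floored at one day.
    expected_gap = max(sum(gaps) / len(gaps), 1.0) if gaps else 1.0
    days_since_last = p.today - p.payment_days[-1]
    if days_since_last > churn_factor * expected_gap:
        return "churning_payer"  # missed their expected payment time
    return "regular_payer"
```

Each of these segments can then be split again, for example by in-game progress, and given its own offer rules.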

A note on AB testing

I’ve already mentioned AB testing, but just in case you’re not familiar with it: it’s when we split an app’s audience into two groups, a ‘control group’ and a ‘test group’. We then make changes to the app for the test group only. After some time we compare the two groups to see whether the changes had a positive effect.

Sounds simple, right? But it’s really easy to get wrong. Here are some of the most common errors:

- not starting with a hypothesis

- stopping the test too early

- stopping the test too late

- measuring the wrong metric

- not accounting for multiple testing (the more comparisons you run, the more likely it is that one looks significant by chance).
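To illustrate the last point, here is a minimal sketch of a pooled two-proportion z-test on conversion rates, combined with the Holm-Bonferroni correction for running several comparisons at once. This is a standard textbook technique, not necessarily what Crazy Panda uses, and it does not address stopping too early, which needs a sample size fixed in advance or a sequential test design.

```python
import math
from typing import List


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no variation at all: no evidence of a difference
    z = (p_b - p_a) / se
    # P(|Z| > |z|) for a standard normal equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def holm_reject(p_values: List[float], alpha: float = 0.05) -> List[bool]:
    """Holm-Bonferroni step-down: controls the family-wise error rate."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, i in enumerate(order):
        # The k-th smallest p-value is compared with alpha / (m - k).
        if p_values[i] <= alpha / (m - rank):
            reject[i] = True
        else:
            break  # once one fails, all larger p-values are kept
    return reject
```

For example, with three test variants giving p-values of 0.01, 0.04 and 0.03, a naive check at 0.05 would call all three winners, while `holm_reject` only accepts the first.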

The best tip I can give you when you’re AB testing is to be patient and precise. It takes time to learn how to avoid the mistakes I’ve listed above, but it will pay off. And remember that testing isn’t just about finding which change is best; it’s also about figuring out the impact it has. This can help us understand which direction all our games should be moving in.

Personalization – advanced techniques

Technique 1: small vs big offers

Let’s go back to the user segmentation groups and look at non-payers. Imagine that we’re thinking of bringing out two offers:

- one with a smaller price

- one with a bigger price and more content.

We know that the first offer will convert better. But don’t dismiss the second one: there will be more high-value payers for this bigger offer. To see this, look at the average revenue per paying user (ARPPU), which here tells us how much each payer spends in their first 30 days.

For the smaller offer, we achieved a conversion rate of 5.4% and an ARPPU of $36. For the bigger offer, we hit 3.8% and $59. So although the bigger offer converts fewer players, it actually earns more per user: 3.8% × $59 ≈ $2.24, against 5.4% × $36 ≈ $1.94 for the smaller one.
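The comparison is simple arithmetic: 30-day revenue per exposed user is conversion multiplied by ARPPU. A sketch, assuming both figures were measured on the same audience over the same 30-day window:

```python
def revenue_per_user(conversion: float, arppu: float) -> float:
    """30-day revenue per exposed user: share of users who pay x spend per payer."""
    return conversion * arppu


small = revenue_per_user(0.054, 36.0)  # smaller offer
big = revenue_per_user(0.038, 59.0)    # bigger offer
print(f"small: ${small:.2f}, big: ${big:.2f}, uplift: {big / small - 1:.1%}")
# small: $1.94, big: $2.24, uplift: 15.3%
```

Comparing conversion or ARPPU alone would point in opposite directions; their product is the number that matters for LTV.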

This doesn’t mean you should automatically go with offer number 2. It depends on the title. For example, extensive testing for World Poker Club showed that the bigger offer was better. But for Stellar Age, the smaller one was more effective.

So which one should you choose? In fact, you might not need to choose at all. What about showing your players BOTH offers at the same time? We gave this a try, although we weren’t actually expecting much of a change: we thought that most payers would shift to the smaller offer and the increase in conversion wouldn’t compensate for the fall in ARPPU. But we were wrong. Showing the double offer gave us the conversion rate of the smaller offer with the same number of high-value payers as the bigger one.

This is a very easy and profitable trick. But you’ve got to get the design right: when we tried showing the two offers as separate, unconnected offers, the result was about 25% less effective and we lost almost all of the increase. That might not be the case with your app, though, so it may well be worth giving it a try.

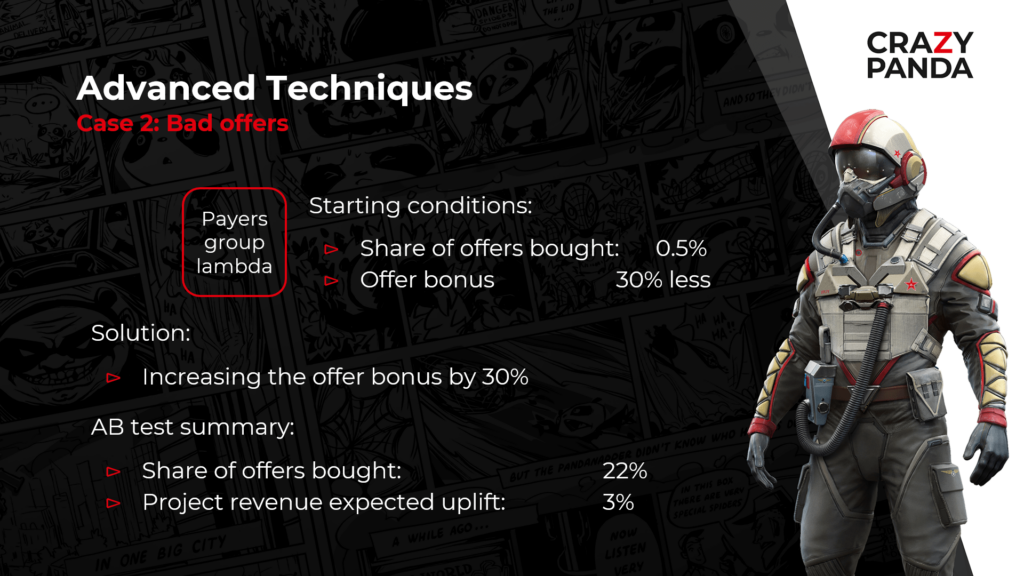

Technique 2: Bad offers

Basically these are offers that users never buy. So it seems like we should just get rid of them, right? Or could it be useful to hang on to them…? We decided to test that theory with, you’ve guessed it, an AB test.

We used a small group of regular payers who only occasionally bought offers, and increased the value of the ‘bad’ offer by 30%. And people bought it – revenue increased by 3%. Great, right?

Actually, no. At the end of the month, instead of the increase we expected, we’d actually suffered a 4% loss. So something had gone really wrong. After investigating, we worked out what had happened: previously, these regular payers had skipped the first offer and waited to buy the second, bigger one. Once we made the first offer too good, they bought that instead, so we had simply shifted their payments to the smaller offer.
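The trap is easier to see with numbers. The sketch below uses made-up figures (not Crazy Panda’s) and a hypothetical `offer_change_effect` helper: revenue from the improved offer goes up, yet total revenue falls, because every player who switches from the big offer to the small one pays the small price but no longer pays the big one.

```python
from typing import Tuple


def offer_change_effect(new_small_buyers: int, switchers: int,
                        small_price: float, big_price: float) -> Tuple[float, float]:
    """Return (extra revenue from the improved small offer, change in total revenue).

    new_small_buyers: players who would not have bought anything before.
    switchers: players who used to wait for the big offer and now buy the small one.
    """
    small_offer_gain = (new_small_buyers + switchers) * small_price
    # Switchers no longer buy the big offer, so its full price is lost for each.
    total_change = small_offer_gain - switchers * big_price
    return small_offer_gain, total_change


# Hypothetical numbers for illustration only.
gain, total = offer_change_effect(new_small_buyers=60, switchers=30,
                                  small_price=5.0, big_price=20.0)
```

Here the improved offer brings in 450 more, but total revenue drops by 150, which is why the metric to watch is total revenue for the segment rather than the target offer’s own sales.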

Bad offers make your good offers look better

So it turns out that ‘bad’ offers are a must in a complex system. They show users the value of better offers, making those offers more attractive, and sometimes they even lead users to pay earlier or to pay more. They also stop us from constantly increasing offer bonuses. That’s good for players, because ever-bigger bonuses usually widen the power gap between older and newer users (essentially, we don’t have to keep increasing the value of these items for payers who have played our game for a long time).

Some of the testing we did for this technique included:

- increasing the target offer’s price to match bigger ones

- decreasing the next offer’s price to match smaller ones

- increasing the next offer’s bonus (which effectively led us to the same situation as before the test, but with an offer with a bigger bonus available to users)

- changing the context of the target offer.

Technique 3: Machine learning

Machine learning personalization is already everywhere. So I won’t talk too much about the technicalities – instead I’m going to focus on how we used it to improve our personalized offer system. Before I do that it’s worth pointing out that only the third machine learning model we developed actually increased our revenue. This might just be us though – maybe you’ll get better results.

Making our model

We started with a goal for our model. It had to be measurable and simple, with an obvious path to improving our metrics. ‘Increase revenue by 25%’, for example, is a bad goal, because the machine learning model itself can’t just print money. ‘Predict what type of currency users want in their next payment, so we can adapt our offer on the fly’ is a good one: it’s measurable and shows we understand how we can use the model’s predictions.

Next we needed historical data. We could have used techniques like unsupervised learning instead, but for us offers make up more than half of game revenue (and for some projects 80%), and training such a model can be expensive. Also keep in mind that if something isn’t in your data, the model won’t catch it.

The next step was the hardest one: a data scientist had to prepare the data. Trust me, this will take up 80% of the time you set aside to develop a machine learning model.

Once we were done with the data, the fun part began. We fitted and researched various algorithms. This was very interesting, challenging and creative.

After all this we finally had a model that could predict the size of a player’s next payment. So we’re done, right? Wrong. We still had to integrate the model into our system, and it had to be fast enough to deliver offers in time (less than five seconds to collect the data and make a prediction). And don’t forget about edge cases: what about users with zero payments? Or with no data at all?
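Those serving requirements can be sketched as a wrapper around the model: skip the model for players with no payments or no data, and fall back to a default offer if the prediction fails or misses the time budget. The `choose_offer` function, the `predict` callable (assumed to both collect the data and predict) and `DEFAULT_OFFER` are hypothetical; a real system would probably give non-payers their own offer logic rather than a single default.

```python
import concurrent.futures as cf
from typing import Callable, Optional, Sequence

DEFAULT_OFFER = "default_offer"  # hypothetical fallback offer id


def choose_offer(
    payment_history: Optional[Sequence[float]],
    predict: Callable[[Sequence[float]], str],
    executor: cf.Executor,
    timeout_s: float = 5.0,
) -> str:
    """Return the model's offer id, or DEFAULT_OFFER for edge cases."""
    # Edge cases: no data at all (None), or a player with zero payments.
    if not payment_history:
        return DEFAULT_OFFER
    future = executor.submit(predict, payment_history)
    try:
        return future.result(timeout=timeout_s)
    except cf.TimeoutError:
        # Missed the time budget; the task keeps running in the background.
        return DEFAULT_OFFER
    except Exception:
        # Model or data-collection failure: never block the offer.
        return DEFAULT_OFFER


with cf.ThreadPoolExecutor(max_workers=4) as pool:
    print(choose_offer([4.99, 9.99], lambda history: "big_offer", pool))
    # prints big_offer
```

Returning a safe default instead of raising means a slow or broken model degrades to the old offer logic rather than showing the player nothing.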

The results

I’m pleased to say the model’s metrics surpassed our expectations. Great. But there was one last thing we needed to do: test it. As I mentioned earlier, our first two models also had great metrics and were very reliable, but the impact of implementing them was mediocre at best. The third model was a success, though: we were able to predict whether users would want hard or soft currency at certain points in time.

Here are some of the things we learned while building our successful model.

- Our biggest problem was setting the right target. Before we developed the model, all our offers contained only hard currency, so we couldn’t just throw our data into some algorithm and get a result. To solve this we created a new target: if a user bought hard currency (in the bank or through an offer) and converted a significant amount of it into soft currency within a short period of time, we labeled that purchase as demand for soft currency rather than hard currency.

- The key idea here is to use all in-game behavior and user states to predict soft currency deficits. We couldn’t easily solve this in an analytical way, which is why the machine-learning model is great here.

- One of the biggest challenges was making the model real-time. That could be a whole blog post in its own right, so I won’t go into it here.

- We chose CatBoost as our algorithm because it turned out to be about four times faster than LightGBM. But tuning features was a pain.
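The relabeling rule from the first point might look like the sketch below. The 24-hour window and the 50% ‘significant amount’ threshold are assumptions, since the exact values aren’t given here, and the `HardPurchase` and `Conversion` records are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HardPurchase:
    t_hours: float    # purchase time
    hard_amount: int  # hard currency received (bank or offer)


@dataclass
class Conversion:
    t_hours: float    # time of the conversion
    hard_spent: int   # hard currency converted into soft currency


def label_purchase(purchase: HardPurchase, conversions: List[Conversion],
                   window_hours: float = 24.0, min_share: float = 0.5) -> str:
    """Label a hard-currency purchase 'soft' if a large share of it was
    converted into soft currency shortly afterwards, else 'hard'."""
    if purchase.hard_amount <= 0:
        return "hard"
    # Sum only conversions that happen inside the window after the purchase.
    converted = sum(
        c.hard_spent for c in conversions
        if purchase.t_hours <= c.t_hours <= purchase.t_hours + window_hours
    )
    return "soft" if converted >= min_share * purchase.hard_amount else "hard"
```

Applied to every historical purchase, labels like these give a classifier (CatBoost, in the post) a target for ‘which currency does this player want next’, even though offers only ever sold hard currency.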

Developing and implementing this model definitely wasn’t easy. But it was literally the first payment feature that got us lots of positive feedback from our players.

Like everything, machine learning takes time to master. And in the right circumstances, it can be a powerful tool. But you need to define the right goals and only use it for complex, but clear, tasks. You should also be aware of the external environment: if you’ve never sold something in your game, the model won’t be able to handle it. For example, if you don’t have any payments of more than $10, the model won’t expect them.

And finally…

Personalization is a natural fit for in-app monetization. And if you take only one point from this post, it should be that you need to test, test and test again, even if you think something is guaranteed to be successful. Last year we ran and closed more than 150 AB tests for World Poker Club’s in-app monetization system alone.

Remember that sometimes these tests won’t be successful. But that’s okay. Learn from them and move on. In 2019, only 42% of our tests were successful. And only 14% of them gave us our 77% increase in users’ LTV.

Fancy working for Crazy Panda?

At Crazy Panda, we’re developing very different games in different genres and are always open to sharing experience and networking. Check out our website to see what we’re working on next, or get in touch if you fancy a chat.